The error handler invokes error diagnostic routines and also generates error messages. It works in conjunction with all the phases to handle the errors at the respective phases.

The token can be defined as a meaningful group of characters over the character set of the programming language like identifiers, keywords, constants and others.

A Symbol Table is a data structure containing a record for each identifier, with fields for the attributes of the identifier.

Tokens

The token can be defined as a meaningful group of characters over the character set of the programming language like identifiers, keywords, constants and others.

Patterns

A set of strings in the input for which the same token is produced as output. This set of strings is described by a rule called a pattern associated with the token.

Lexemes

A lexeme is a sequence of characters in the source program that matches the pattern for a token and is identified by the lexical analyzer as an instance of that token.

A Complier is a program that reads a program written in one language-the source language-and translates it in to an equivalent program in another language-the target language . As an important part of this translation process, the compiler reports to its user the presence of errors in the source program.![]()

![]()

Mention few cousins of compiler. May/June 2012

The following are the cousins of compilers

The two main parts are ![]()

![]()

Analysis part breaks up the source program into constituent pieces and creates An ![]()

![]() intermediate representation of the source program.

intermediate representation of the source program.![]()

![]()

Synthesis part constructs the desired target program from the intermediate Representation

List four software toolsthat analyses thesource program. May/June 2006

S.No |

Parse tree |

Syntax tree |

interior nodes are non-terminals, leaves are terminals |

interior nodes are “operators”, leaves are operands |

|

rarely constructed as a data structure |

when representing a program in a tree structure |

|

Represents the concrete syntax of a program |

Represents the abstract syntax of a program (the |

|

|

A syntax tree is often called abstract syntax tree or AST |

Program: a + b * c

A Loader is a program that performs the two functions ![]()

![]()

i. Loading

![]()

![]() ii .Link editing

ii .Link editing![]()

![]()

The process of loading consists of taking relocatable machine code, altering the relocatable address and placing the altered instructions and data in memory at the proper locations.

![]()

![]() A preprocessor is one, which produces input to compilers. A source program may be divided into modules stored in separate files. The task of collecting the source program is sometimes entrusted to a distinct program called a preprocessor.

A preprocessor is one, which produces input to compilers. A source program may be divided into modules stored in separate files. The task of collecting the source program is sometimes entrusted to a distinct program called a preprocessor.![]()

![]()

![]()

![]() The preprocessor may also expand macros into source language statements.

The preprocessor may also expand macros into source language statements.![]()

![]()

Skeletal source program

![]()

![]()

Preprocessor

![]()

![]()

Source program![]()

![]()

![]()

![]()

![]()

![]() Assembler is a program, which converts the assembly language in to machine language.

Assembler is a program, which converts the assembly language in to machine language.

Interpreter is a program which converts source language into machine language line by line.![]()

![]()

![]()

![]()

![]()

![]() What is a lexeme? Define a regular set. APRIL/MAY 2011

What is a lexeme? Define a regular set. APRIL/MAY 2011

A Lexeme is a sequence of characters in the source program that is matched by the pattern for a token.A language denoted by a regular expression is said to be a regular set.

What is a sentinel? What is its purpose? April/May 2010

A Sentinel is a special character that cannot be part of the source program. Normally we use “eof” as the sentinel. This is used for speeding-up the lexical analyzer.

Regular expression is a method to describe regular Language Rules:![]()

![]()

What are the possible error recovery actions in lexical analyzer? May/June 2012

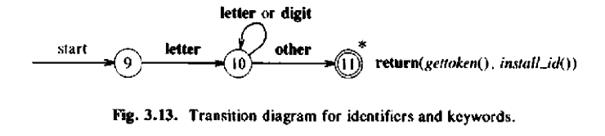

How to Precisely Match Strings to Tokens

How to Implement a Lexical Analyzer

The grammar is mainly used for parsing only - the key is to resolve all ambiguities. This grammar is called Concrete Syntax.

• Abstract Syntax is used to characterize the essential structure of the program -

the key is to be as simple as possible; it may contain ambiguities.

• Traditional Compilers do semantic analysis on Concrete Syntax

• Modern Compilers do the semantic analysis on Abstract Syntax tree

An interpreter is a translator used to convert high level program into machine code. These translators translate the program line by line. A Compiler is also a translator which is used to translate the whole source of a program at a time.

Lexical analyzer will detect the tokens from the source language with the help of input buffering. The reason is, the lexical analyzer will scan the input character by character, it will increase the cost file read operation.. So buffering is used. The buffer is a pair in which each half is equal to system read command.

Token structure is specified with the help of Pattern. The pattern can be described with the help of Regular Expression

Its main task is to read input characters and produce as output a sequence of tokens that parser uses for syntax analysis. Additionally task is removing blank, new line and tab characters

Regular expressions are used for specifying the token structure.

1. Simplicity of design

2. Compiler efficiency is improved

3. Compiler portability is enhanced

Write the regular expression for identifier and Number.Nov/Dec 2012

PART –B

With a neat block diagram,explain the various phases of a compiler in detail.Assuming an expression give the output of each phase.(10) Nov/Dec 2006

What are the phases of a compiler. Explain each phase in detail.Write down the output of each phase for the expression a:=b+c*50.(10)Nov/Dec 2004,April/May 2005,April/May 2011, April/May 2012,May/June 2013

What are the various phases of the compiler? Explain each phase in detail. May/June 2012

Explain in detail about phases of compiler and translate the statement pos=init+rate*60. Nov/Dec 2012

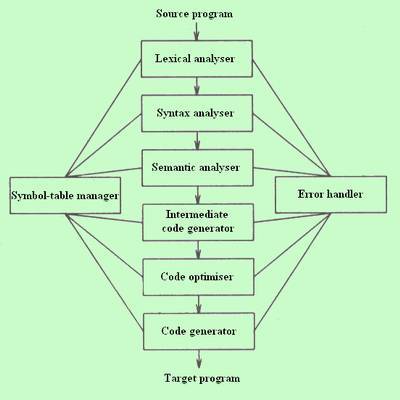

The different phases of a compiler

Conceptually, a compiler operates in phases, each of which transforms the source program from one representation to another.

The first three phases, forms the bulk of the analysis portion of a compiler. Symbol table management and error handling, are shown interacting with the six phases.

Symbol table management

An essential function of a compiler is to record the identifiers used in the source program and collect information about various attributes of each identifier. A symbol table is a data structure containing a record for each identifier, with fields for the attributes of the identifier. The data structure allows us to find the record for each identifier quickly and to store or retrieve data from that record quickly. When an identifier in the source program is detected by the lex analyzer, the identifier is entered into the symbol table.

Error Detection and Reporting

Each phase can encounter errors. A compiler that stops when it finds the first error is not as helpful as it could be. The syntax and semantic analysis phases usually handle a large fraction of the errors detectable by the compiler. The lexical phase can detect errors where the characters remaining in the input do not form any token of the language. Errors when the token stream violates the syntax of the language are determined by the syntax analysis phase. During semantic analysis the compiler tries to detect constructs that have the right syntactic structure but no meaning to the operation involved.

The Analysis phases

As translation progresses, the compiler’s internal representation of the source program changes. Consider the statement,

position := initial + rate * 10

The lexical analysis phase reads the characters in the source pgm and groups them into a stream of tokens in which each token represents a logically cohesive sequence of characters, such as an identifier, a keyword etc. The character sequence forming a token is called the lexeme for the token. Certain tokens will be augmented by a ‘lexical value’. For example, for any identifier the lex analyzer generates not only the token id but also enter s the lexeme into the symbol table, if it is not already present there. The lexical value associated this occurrence of id points to the symbol table entry for this lexeme. The representation of the statement given above after the lexical analysis would be:

id1: = id2 + id3 * 10

Syntax analysis imposes a hierarchical structure on the token stream, which is shown by syntax trees.

Intermediate Code Generation

After syntax and semantic analysis, some compilers generate an explicit intermediate representation of the source program. This intermediate representation can have a variety of forms.

In three-address code, the source pgm might look like this,

temp1: = inttoreal (10)

temp2: = id3 * temp1

temp3: = id2 + temp2

id1: = temp3

Code Optimisation

The code optimization phase attempts to improve the intermediate code, so that faster running machine codes will result. Some optimizations are trivial. There is a great variation in the amount of code optimization different compilers perform. In those that do the most, called ‘optimising compilers’, a significant fraction of the time of the compiler is spent on this phase.

Code Generation

The final phase of the compiler is the generation of target code, consisting normally of relocatable machine code or assembly code. Memory locations are selected for each of the variables used by the program. Then, intermediate instructions are each translated into a sequence of machine instructions that perform the same task. A crucial aspect is the assignment of variables to registers.

Explain the various Compiler Construction Tools. (Page No.22) April/May 2011,

April/May 2012

COMPILER CONSTRUCTION TOOLKITS

The compiler writer, like any software developer, can profitably use modern software development environments containing tools such as language editors, debuggers, version managers, profilers, test harnesses, and so on. In addition to these general software-development tools, other more specialized tools have been created to help implement various phases of a compiler. These tools use specialized languages for specifying and implementing specific components, and many use quite sophisticated algorithms. The most successful tools are those that hide the details of the generation algorithm and produce components that can be easily integrated into the remainder of the compiler. Some commonly used compiler-construction tools include

1. Parser generatorsthat automatically produce syntax analyzers from a grammatical description of a programming language.

2. Scanner generatorsthat produce lexical analyzers from a regular-expression description of the tokens of a language.

3. Syntax-directed translation enginesthat produce collections of routines for walking a parse tree and generating intermediate code.

4. Code-generator generatorsthat produce a code generator from a collection of rules for translating each operation of the intermediate language into the machine language for a target machine.

5. Data-flow analysis enginesthat facilitate the gathering of information about how values are transmitted from one part of a program to each other part. Data-flow analysis is a key part of code optimization.

6. Compiler-construction toolkitsthat provide an integrated set of routines for constructing various phases of a compiler

The following are the cousins of compilers

INPUT BUFFERING

During lexical analyzing , to identify a lexeme , it is important to look ahead atleast one additional character. Specialized buffering techniques have been developed to reduce the amount of overhead required to process a single input character

An important scheme involves two buffers that are alternatively reloaded

Each buffer is of the same size N, and N is usually the size of a disk block, e.g., 4096 bytes. Using one system read command we can read N characters into a buffer, rather than using one system call per character. If fewer than N characters remain in the input file, then a special character, represented by eof marks the end of the source file and is different from any possible character of the source program.

Two pointers to the input are maintained:

I. Pointer lexemeBegin, marks the beginning of the current lexeme, whose extent we are attempting to determine.

2. Pointer forward scans ahead until a pattern match is found; the exact strategy whereby this determination is made will be covered in the balance of this chapter.

Once the next lexeme is determined, forward is set to the character at its right end. Then, after the lexeme is recorded as an attribute value of a token returned to the parser, 1exemeBegin is set to the character immediately after the lexeme just found. In Fig. 3.3, we see forward has passed the end of the next lexeme, ** (the Fortran exponentiation operator), and must be retracted one position to its left.

Advancing forward requires that we first test whether we have reached the end of one of the buffers, and if so, we must reload the other buffer from the input, and move forward to the beginning of the newly loaded buffer. As long as we never need to look so far ahead of the actual lexeme that the sum of the lexeme's length plus the distance we look ahead is greater than N, we shall never overwrite the lexeme in its buffer before determining it.

Sentinels

If we use the scheme of Section 3.2.1 as described, we must check, each time we advance forward, that we have not moved off one of the buffers; if we do, then we must also reload the other buffer. Thus, for each character read, we make two tests: one for the end of the buffer, and one to determine what character

is read (the latter may be a multiway branch). We can combine the buffer-end test with the test for the current character if we extend each buffer to hold a sentinel character at the end. The sentinel is a special character that cannot be part of the source program, and a natural choice is the character eof. Figure 3.4 shows the same arrangement as Fig. 3.3, but with the sentinels added. Note that eof retains its use as a marker for the end of the entire input. Any eof that appears other than at the end of a buffer means that the input is at an end. Figure 3.5 summarizes the algorithm for advancing forward. Notice how the first test, which can be part of a multiway branch based on the character pointed to by forward, is the only test we make, except in the case where we actually are at the end of a buffer or the end of the input.

As the first phase of a compiler, the main task of the lexical analyzer is to read the input characters of the source program, group them into lexemes, and produce as output a sequence of tokens for each lexeme in the source program. The stream of tokens is sent to the parser for syntax analysis. It is common for the lexical analyzer to interact with the symbol table as well. When the lexical analyzer discovers a lexeme constituting an identifier, it needs to enter that lexeme into the symbol table. In some cases, information regarding the These interactions are suggested in Fig. 3.1. Commonly, the interaction is implemented by having the parser call the lexical analyzer. The call, suggested by the getNextToken command, causes the lexical analyzer to read characters from its input until it can identify the next lexeme and produce for it the next token, which it returns to the parser.

Since the lexical analyzer is the part of the compiler that reads the source text, it may perform certain other tasks besides identification of lexemes.

One such task is stripping out comments and whitespace (blank, newline, tab, and perhaps other characters that are used to separate tokens in the input).

Another task is correlating error messages generated by the compiler with the source program. For instance, the lexical analyzer may keep track of the number of newline characters seen, so it can associate a line number with each error message.

In some compilers, the lexical analyzer makes a copy of the source program with the error messages inserted at the appropriate positions. If the source program uses a macro-preprocessor, the expansion of macros may also

be performed by the lexical analyzer.

error recovery actions:

Token:

A token is a pair consisting of a token name and an optional attribute value. The token name is an abstract symbol representing a kind of lexical unit, e.g., a particular keyword, or a sequence of input characters denoting an identifier. The token names are the input symbols that the parser processes. In what follows, we shall generally write the name of a token in boldface. We will often refer to a token by its token name.

Pattern:

A pattern is a description of the form that the lexemes of a token may take. In the case of a keyword as a token,the pattern is just the sequence of characters that form the keyword. For identifiers and some other tokens,

the pattern is a more complex structure that is matched by many strings.

Lexeme:

A lexeme is a sequence of characters in the source program that matches the pattern for a token and is identified by the lexical analyzer as an instance of that token.

1.Tokens are treated as terminal symbols in the grammar for the source language using boldface names to represent tokens.

2.The lexemes matched by the pattern for the tokens represent the strings of characters in the source program that can be treated together as a lexical unit

3.In most of the programming languages keywords, operators identifiers , constants , literals and punctuation symbols are treated as tokens.

4. A pattern is a rule describing the set of lexemes that can represent a particular token in the source program.

5. In many languages certain strings are reserved i.e their meanings are predefined and cannot be changes by the users

6. If the keywords are not reserved then the lexical analyzer must distinguish between a keyword and a user-defined identifier

ATTRIBUTES FOR TOKENS:

When more than one lexeme can match a pattern, the lexical analyzer must provide the subsequent compiler phases additional information about the particular lexeme that matched. For example, the pattern for token number matches both 0 and 1, but it is extremely important for the code generator to know which lexeme was found in the source program. Thus, in many cases the lexical analyzer returns to the parser not only a token name, but an attribute value that describes the lexeme represented by the token; the token name influences parsing decisions, while the attribute value influences translation of tokens after the parse. We shall assume that tokens have at most one associated attribute, although this attribute may have a structure that combines several pieces of information. The most important example is the token id, where we need to associate with

The token a great deal of information. Normally, information about an identifier - e.g., its lexeme, its type, and the location at which it is first found (in case an error message about that identifier must be issued) - is kept in the symbol table. Thus, the appropriate attribute value for an identifier is a pointer to the symbol-table entry for that identifier.

Example : The token names and associated attribute values for the Fortran statement

are written below as a sequence of pairs.

<id, pointer to symbol-table entry for E>

< assign-op >

<id, pointer to symbol-table entry for M>

<mult -op>

<id, pointer to symbol-table entry for C>

<exp-op>

<number , integer value 2 >

Note that in certain pairs, especially operators, punctuation, and keywords, there is no need for an attribute value. In this example, the token number has been given an integer-valued attribute. In practice, a typical compiler would instead store a character string representing the constant and use as an attribute value for number a pointer to that string.

SPECIFICATION OF TOKENS :

Regular languages are an important notation for specifying lexeme patterns

Strings and Languages:

Consider the following example

stmt + if expr then stmt

I if expr then stmt else stmt

I E.

expr + term relop term

I term

term + id

I number

This syntax is similar to that of the language Pascal, in that then appears explicitly after conditions.

For relop, we use the comparison operators of languages like Pascal or SQL, where = is "equals" and <> is "not equals," because it presents an interesting structure of lexemes. The terminals of the grammar, which are if, then, else, relop, id, and number, are the names of tokens as far as the lexical analyzer is concerned. The patterns for these tokens are described using regular definitions

For this language, the lexical analyzer will recognize the keywords i f , then, and else, as well as lexemes that match the patterns for relop, id, and number. To simplify matters, we make the common assumption that keywords are also reserved words: that is, they are not identifiers, even though their lexemes match the pattern for identifiers. In addition, we assign the lexical analyzer the job of stripping out whitespace, by recognizing the "token" ws defined by:

ws -+ ( blank I tab ( newline )+

Here, blank, tab, and newline are abstract symbols that we use to express the ASCII characters of the same names. Token ws is different from the other tokens in that, when we recognize it, we do not return it to the parser, but rather restart the lexical analysis from the character that follows the whitespace. It is the following token that gets returned to the parser.

ISSUES IN LEXICAL ANALYSIS:

1. Simplicity of design : It is the most important consideration. The separation of lexical and syntactic analysis often allows us to simplify at least one of these tasks. For example, a parser that had to deal with comments

and whitespace as syntactic units would be considerably more complex than one that can assume comments and whitespace have already been removed by the lexical analyzer. If we are designing a new language, separating lexical and syntactic concerns can lead to a cleaner overall language design.

2. Compiler efficiency is improved: A separate lexical analyzer allows us to apply specialized techniques that serve only the lexical task, not the job of parsing. In addition, specialized buffering techniques for reading input

characters can speed up the compiler significantly.

3. Compiler portability is enhanced: Input-device-specific peculiarities can be restricted to the lexical analyzer.

Transition Diagram for relational operators:

Transition Diagram for unsigned numbers:

10. Compare NFA and DFA. Construct DFA directly from augmented regular expression((ε/a)b*)*. Nov/Dec 2012

11. Draw DFA for the augmented regular expression((a/b)*# directly using syntax tree. Nov/Dec 2013

Source: http://www.snscourseware.org/snsct/files/CW_588c466ddccdb/PCD%20Univ%20QB%20-%20unit_1.doc

Web site to visit: http://www.snscourseware.org

Author of the text: indicated on the source document of the above text

If you are the author of the text above and you not agree to share your knowledge for teaching, research, scholarship (for fair use as indicated in the United States copyrigh low) please send us an e-mail and we will remove your text quickly. Fair use is a limitation and exception to the exclusive right granted by copyright law to the author of a creative work. In United States copyright law, fair use is a doctrine that permits limited use of copyrighted material without acquiring permission from the rights holders. Examples of fair use include commentary, search engines, criticism, news reporting, research, teaching, library archiving and scholarship. It provides for the legal, unlicensed citation or incorporation of copyrighted material in another author's work under a four-factor balancing test. (source: http://en.wikipedia.org/wiki/Fair_use)

The information of medicine and health contained in the site are of a general nature and purpose which is purely informative and for this reason may not replace in any case, the council of a doctor or a qualified entity legally to the profession.

The texts are the property of their respective authors and we thank them for giving us the opportunity to share for free to students, teachers and users of the Web their texts will used only for illustrative educational and scientific purposes only.

All the information in our site are given for nonprofit educational purposes