COMP-4640: Intelligent & Interactive Systems

(Learning from Observations)

Supervised learning (e.g., case-based reasoning) is a machine learning technique for learning a function from training data. The training data consist of pairs of input objects (typically vectors) and desired outputs.

Reinforcement learning is a sub-area of machine learning concerned with how an agent ought to take actions in an environment so as to maximize some notion of long-term reward.

Reinforcement learning algorithms attempt to find a policy that maps states of the world to the actions the agent ought to take in those states; the environment is typically modeled as a finite-state Markov decision process (MDP).

Unsupervised learning is a class of problems in which one seeks to determine how the data are organised. It is distinguished from supervised learning (and reinforcement learning) in that the learner is given only unlabeled examples.

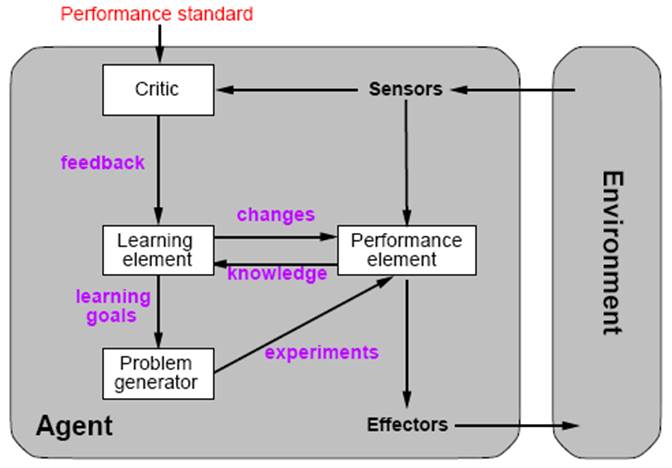

The Four Components of Learning Systems (cont.)

(Outlook = Sunny ∧ Humidity = Normal) ∨ (Outlook = Overcast) ∨ (Outlook = Rain ∧ Wind = Weak)

[picture from: Tom M. Mitchell, Machine Learning, McGraw-Hill, 1997]

This decision tree was created using the following training examples:

| Day | Outlook | Temperature | Humidity | Wind | PlayTennis |
|---|---|---|---|---|---|
| D1 | Sunny | Hot | High | Weak | No |
| D2 | Sunny | Hot | High | Strong | No |
| D3 | Overcast | Hot | High | Weak | Yes |
| D4 | Rain | Mild | High | Weak | Yes |
| D5 | Rain | Cool | Normal | Weak | Yes |
| D6 | Rain | Cool | Normal | Strong | No |
| D7 | Overcast | Cool | Normal | Strong | Yes |
| D8 | Sunny | Mild | High | Weak | No |
| D9 | Sunny | Cool | Normal | Weak | Yes |
| D10 | Rain | Mild | Normal | Weak | Yes |
| D11 | Sunny | Mild | Normal | Strong | Yes |
| D12 | Overcast | Mild | High | Strong | Yes |
| D13 | Overcast | Hot | Normal | Weak | Yes |
| D14 | Rain | Mild | High | Strong | No |

[Table from: Tom M. Mitchell, Machine Learning, McGraw-Hill, 1997]
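As a quick sanity check, the disjunctive expression for the tree can be written as a predicate and evaluated against the fourteen training examples in the table. This is a minimal Python sketch; the names `EXAMPLES`, `tree_says_yes` and `misclassified` are illustrative and do not come from the source.

```python
# PlayTennis training examples (Mitchell, 1997), transcribed from the table:
# (Day, Outlook, Temperature, Humidity, Wind, PlayTennis)
EXAMPLES = [
    ("D1", "Sunny", "Hot", "High", "Weak", "No"),
    ("D2", "Sunny", "Hot", "High", "Strong", "No"),
    ("D3", "Overcast", "Hot", "High", "Weak", "Yes"),
    ("D4", "Rain", "Mild", "High", "Weak", "Yes"),
    ("D5", "Rain", "Cool", "Normal", "Weak", "Yes"),
    ("D6", "Rain", "Cool", "Normal", "Strong", "No"),
    ("D7", "Overcast", "Cool", "Normal", "Strong", "Yes"),
    ("D8", "Sunny", "Mild", "High", "Weak", "No"),
    ("D9", "Sunny", "Cool", "Normal", "Weak", "Yes"),
    ("D10", "Rain", "Mild", "Normal", "Weak", "Yes"),
    ("D11", "Sunny", "Mild", "Normal", "Strong", "Yes"),
    ("D12", "Overcast", "Mild", "High", "Strong", "Yes"),
    ("D13", "Overcast", "Hot", "Normal", "Weak", "Yes"),
    ("D14", "Rain", "Mild", "High", "Strong", "No"),
]


def tree_says_yes(outlook: str, humidity: str, wind: str) -> bool:
    """The learned tree written as a disjunction of conjunctions."""
    return ((outlook == "Sunny" and humidity == "Normal")
            or outlook == "Overcast"
            or (outlook == "Rain" and wind == "Weak"))


def misclassified(examples):
    """Return the days whose PlayTennis label disagrees with the tree."""
    return [day for day, outlook, _temp, humidity, wind, label in examples
            if tree_says_yes(outlook, humidity, wind) != (label == "Yes")]


print(misclassified(EXAMPLES))
```

An empty list means the tree classifies every training example correctly, which is what one would expect from a tree grown until every leaf is pure on data with no contradictory examples.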

In building a decision tree we:

Determining which attribute is best (Entropy & Gain)
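In the usual ID3 formulation (Mitchell, 1997), entropy measures the impurity of a set S of examples, with p₊ and p₋ the proportions of positive and negative examples in S, and information gain is the expected reduction in entropy from partitioning S on attribute A, with S_v the subset of S for which A has value v. The block below states both definitions and then works the numbers for Outlook at the root of the PlayTennis tree (Sunny [2+, 3−], Overcast [4+, 0−], Rain [3+, 2−]); figures are rounded to four decimals.

```latex
\[
\begin{aligned}
\mathrm{Entropy}(S) &= -p_{+}\log_2 p_{+} - p_{-}\log_2 p_{-} \\
\mathrm{Gain}(S, A) &= \mathrm{Entropy}(S) - \sum_{v \in \mathrm{Values}(A)} \frac{|S_v|}{|S|}\,\mathrm{Entropy}(S_v) \\[6pt]
\mathrm{Entropy}([9+, 5-]) &= -\tfrac{9}{14}\log_2\tfrac{9}{14} - \tfrac{5}{14}\log_2\tfrac{5}{14} \approx 0.9403 \\
\mathrm{Entropy}([2+, 3-]) = \mathrm{Entropy}([3+, 2-]) &\approx 0.9710, \qquad \mathrm{Entropy}([4+, 0-]) = 0 \\
\mathrm{Gain}(S, \mathit{Outlook}) &= 0.9403 - \tfrac{5}{14}(0.9710) - \tfrac{4}{14}(0) - \tfrac{5}{14}(0.9710) \approx 0.2467
\end{aligned}
\]
```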

COMP-6600/7600: Artificial & Computational Intelligence

Let's Try an Example!
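The example can be worked end to end with a small ID3-style learner: compute Gain(S, A) for every attribute, split on the best one, and recurse on each subset. The Python sketch below makes simplifying assumptions (hypothetical helper names, a branch only for values that occur in the current subset, ties broken by attribute order, the majority label when attributes run out) and is not the course's reference implementation.

```python
import math
from collections import Counter

# PlayTennis examples from the table (Mitchell, 1997).
ATTRIBUTES = ["Outlook", "Temperature", "Humidity", "Wind"]
TARGET = "PlayTennis"
ROWS = [
    ("Sunny", "Hot", "High", "Weak", "No"),
    ("Sunny", "Hot", "High", "Strong", "No"),
    ("Overcast", "Hot", "High", "Weak", "Yes"),
    ("Rain", "Mild", "High", "Weak", "Yes"),
    ("Rain", "Cool", "Normal", "Weak", "Yes"),
    ("Rain", "Cool", "Normal", "Strong", "No"),
    ("Overcast", "Cool", "Normal", "Strong", "Yes"),
    ("Sunny", "Mild", "High", "Weak", "No"),
    ("Sunny", "Cool", "Normal", "Weak", "Yes"),
    ("Rain", "Mild", "Normal", "Weak", "Yes"),
    ("Sunny", "Mild", "Normal", "Strong", "Yes"),
    ("Overcast", "Mild", "High", "Strong", "Yes"),
    ("Overcast", "Hot", "Normal", "Weak", "Yes"),
    ("Rain", "Mild", "High", "Strong", "No"),
]
EXAMPLES = [dict(zip(ATTRIBUTES + [TARGET], row)) for row in ROWS]


def entropy(examples):
    """Entropy (in bits) of the target label over a list of examples."""
    n = len(examples)
    counts = Counter(e[TARGET] for e in examples)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def gain(examples, attribute):
    """Expected reduction in entropy from splitting on `attribute`."""
    n = len(examples)
    remainder = 0.0
    for value in {e[attribute] for e in examples}:
        subset = [e for e in examples if e[attribute] == value]
        remainder += len(subset) / n * entropy(subset)
    return entropy(examples) - remainder


def id3(examples, attributes):
    """Grow a tree greedily, always splitting on the highest-gain attribute."""
    labels = Counter(e[TARGET] for e in examples)
    if len(labels) == 1 or not attributes:
        return labels.most_common(1)[0][0]  # pure node, or majority label
    best = max(attributes, key=lambda a: gain(examples, a))
    rest = [a for a in attributes if a != best]
    branches = {}
    for value in sorted({e[best] for e in examples}):
        subset = [e for e in examples if e[best] == value]
        branches[value] = id3(subset, rest)
    return {best: branches}


for a in ATTRIBUTES:
    print(f"Gain(S, {a}) = {gain(EXAMPLES, a):.4f}")
print(id3(EXAMPLES, ATTRIBUTES))
```

On the PlayTennis table, Outlook should come out with the largest gain (about 0.2467), and the grown tree should have the same shape as the one pictured: Overcast leads straight to Yes, Sunny is split on Humidity, and Rain is split on Wind.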

Overfitting

In decision tree learning (whenever the set of possible hypotheses is large) it is possible to “overfit” the training set, that is, to find meaningless regularity in the data (p. 662). Overfitting occurs even when the target function is not at all random.

At the onset of overfitting, the accuracy of the growing tree on the training set continues to increase, but successive trees begin to lose their ability to generalize beyond the training set.

Overfitting can be reduced by using:

Decision tree pruning: prevent recursive splitting on attributes that are not clearly relevant, even when the data at that node in the tree are not uniformly classified.

Cross-validation: estimate how well the current hypothesis will predict unseen data. This is done by setting aside some fraction of the known data and using it to test the prediction performance of a hypothesis induced from the remaining data.

k-fold cross-validation means running k experiments, each time setting aside a different 1/k of the data to test on, and averaging the results.
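The k-fold procedure can be sketched in a few lines of Python. Fold membership here is assigned round-robin by index (an assumption; in practice the data are usually shuffled first), k must not exceed the number of examples, and the learner is a hypothetical majority-class baseline that keeps the example short; any function that maps training data to a hypothesis could be plugged in instead.

```python
from collections import Counter


def k_fold_splits(n, k):
    """Yield (train, test) index lists; fold i tests on every index j with j % k == i."""
    for i in range(k):
        test = [j for j in range(n) if j % k == i]
        train = [j for j in range(n) if j % k != i]
        yield train, test


def cross_validate(xs, ys, learn, k):
    """Run k experiments, each holding out a different 1/k of the data; average accuracy."""
    scores = []
    for train, test in k_fold_splits(len(xs), k):
        h = learn([xs[j] for j in train], [ys[j] for j in train])
        correct = sum(h(xs[j]) == ys[j] for j in test)
        scores.append(correct / len(test))
    return sum(scores) / k


def majority_learner(xs, ys):
    """Hypothetical baseline learner: always predict the most common training label."""
    label = Counter(ys).most_common(1)[0][0]
    return lambda x: label


# PlayTennis labels for D1..D14; the baseline learner ignores the inputs.
labels = ["No", "No", "Yes", "Yes", "Yes", "No", "Yes",
          "No", "Yes", "Yes", "Yes", "Yes", "Yes", "No"]
days = [f"D{i}" for i in range(1, 15)]
print(cross_validate(days, labels, majority_learner, k=len(days)))  # leave-one-out
```

With k equal to the number of examples (leave-one-out), the majority baseline scores 9/14, about 0.643: each held-out Yes is predicted correctly and each held-out No is not.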

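Decision tree learning can be extended to deal with:

Missing data: in many domains, not all attribute values will be known for every example.

Multivalued attributes: we could add an attribute Restaurant Name, with a different value for every example. Each subset produced by splitting on it would then contain a single example with a unique classification, so information gain would rank it as the best attribute even though it is useless for classifying unseen examples.

Continuous-valued output attributes: if we are trying to predict a numerical value, such as the price of a work of art, rather than a discrete classification, then we need a regression tree. Each leaf of a regression tree holds a linear function of some subset of the numerical attributes, rather than a single value.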
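The Restaurant Name problem can be reproduced on the PlayTennis table by treating the Day column (D1 to D14, a different value for every example) as if it were an attribute. The short Python sketch below, with illustrative names, compares its information gain with that of Outlook.

```python
import math
from collections import Counter

# PlayTennis labels and Outlook values for D1..D14, from the table.
LABELS = ["No", "No", "Yes", "Yes", "Yes", "No", "Yes",
          "No", "Yes", "Yes", "Yes", "Yes", "Yes", "No"]
OUTLOOK = ["Sunny", "Sunny", "Overcast", "Rain", "Rain", "Rain", "Overcast",
           "Sunny", "Sunny", "Rain", "Sunny", "Overcast", "Overcast", "Rain"]
DAY = [f"D{i}" for i in range(1, 15)]  # a different value for every example


def entropy(labels):
    """Entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum(c / n * math.log2(c / n) for c in Counter(labels).values())


def gain(values, labels):
    """Information gain of splitting `labels` by the parallel list `values`."""
    n = len(labels)
    remainder = 0.0
    for v in set(values):
        subset = [y for x, y in zip(values, labels) if x == v]
        remainder += len(subset) / n * entropy(subset)
    return entropy(labels) - remainder


print("Gain(Day)     =", round(gain(DAY, LABELS), 4))
print("Gain(Outlook) =", round(gain(OUTLOOK, LABELS), 4))
```

Day receives a gain equal to the full entropy of the set (about 0.940), far above Outlook, because every one-example subset is pure; yet a day label says nothing about a fifteenth day.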

Concept Learning

Consider the following set of training examples:

| height | attitude | KOG | scoring ability | defensive ability | Great Player |
|---|---|---|---|---|---|
| tall | good | good | high | good | yes |
| short | bad | good | low | poor | no |
| tall | good | good | low | good | yes |
| tall | good | poor | high | poor | no |
| short | good | poor | low | good | yes |

One hypothesis that is consistent with all five examples (it matches every positive example and no negative one) is:

`<?,good,?,?,good>`

Are there any other hypotheses that will work? Are they more or less general?
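For a hypothesis space this small, the question can be answered by brute force. The Python sketch below enumerates every conjunctive hypothesis in which each attribute is either `?` or one of the values seen in the table (an assumption about the attribute domains; the empty hypothesis is skipped because it cannot match the positive examples) and keeps those consistent with all five examples.

```python
from itertools import product

# Attribute domains, assumed to be just the values appearing in the table:
# height, attitude, KOG, scoring ability, defensive ability.
DOMAINS = [("tall", "short"), ("good", "bad"), ("good", "poor"),
           ("high", "low"), ("good", "poor")]
EXAMPLES = [  # (instance, is a great player?)
    (("tall", "good", "good", "high", "good"), True),
    (("short", "bad", "good", "low", "poor"), False),
    (("tall", "good", "good", "low", "good"), True),
    (("tall", "good", "poor", "high", "poor"), False),
    (("short", "good", "poor", "low", "good"), True),
]


def matches(h, x):
    """A conjunctive hypothesis matches x if each constraint is '?' or equals x's value."""
    return all(c == "?" or c == v for c, v in zip(h, x))


def consistent(h, examples):
    """h is consistent if it matches exactly the positive examples."""
    return all(matches(h, x) == label for x, label in examples)


# Each attribute is either '?' (any value) or one specific value.
all_hypotheses = product(*[("?",) + d for d in DOMAINS])
version_space = [h for h in all_hypotheses if consistent(h, EXAMPLES)]
for h in version_space:
    print("<" + ",".join(h) + ">")
```

It finds exactly two hypotheses: the one above and the more general `<?,?,?,?,good>`, which says a player is great whenever defensive ability is good.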

The Find-S Algorithm

Step 1: Initialize S to the most specific hypothesis

Step 2: For each positive training example x

For each value ai assigned to an attribute

If ai is different from the corresponding value in x Then

Replace ai with the next more general value
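The steps above can be sketched in Python for conjunctive hypotheses and run on the basketball examples. Using `None` for the most specific constraint (no value acceptable) and `'?'` for "any value" are representation choices of this sketch, not something the source prescribes.

```python
# Basketball examples from the table: (height, attitude, KOG,
# scoring ability, defensive ability), is a great player?
EXAMPLES = [
    (("tall", "good", "good", "high", "good"), True),
    (("short", "bad", "good", "low", "poor"), False),
    (("tall", "good", "good", "low", "good"), True),
    (("tall", "good", "poor", "high", "poor"), False),
    (("short", "good", "poor", "low", "good"), True),
]


def find_s(examples):
    """Find-S: None = no value acceptable, '?' = any value."""
    h = [None] * len(examples[0][0])      # Step 1: most specific hypothesis
    for x, positive in examples:          # Step 2: positive examples only
        if not positive:
            continue
        for i, value in enumerate(x):
            if h[i] is None:
                h[i] = value              # None -> the observed value
            elif h[i] != "?" and h[i] != value:
                h[i] = "?"                # a specific value -> any value
    return h


print(find_s(EXAMPLES))  # → ['?', 'good', '?', '?', 'good']
```

On these examples Find-S returns the hypothesis given earlier; negative examples are skipped, as Step 2 specifies.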

The Find-G Algorithm

Step 1: Initialize G to the most general hypothesis in the hypothesis space

Step 2: For each training example x

If x is negative Then

Else

Remove from G all hypotheses that do not match x

Candidate Elimination Algorithm

Step 1: Initialize G to the set of most general hypotheses

Initialize S to the set of most specific hypotheses

Step 2: For each training example, x,

If x is positive Then

Else
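Filling in both branches, the sketch below follows the usual candidate elimination updates for conjunctive hypotheses (Mitchell, 1997): a positive example prunes G and minimally generalizes S; a negative example prunes S and minimally specializes G, keeping only specializations that are still more general than some member of S, and only the maximally general ones. It is a minimal Python illustration on the basketball data with hypothetical names, attribute domains assumed from the table, and `None` standing for "no value acceptable"; it is not the course's own code.

```python
# Attribute domains, assumed to be just the values seen in the basketball table.
DOMAINS = [("tall", "short"), ("good", "bad"), ("good", "poor"),
           ("high", "low"), ("good", "poor")]
EXAMPLES = [  # (instance, is a great player?)
    (("tall", "good", "good", "high", "good"), True),
    (("short", "bad", "good", "low", "poor"), False),
    (("tall", "good", "good", "low", "good"), True),
    (("tall", "good", "poor", "high", "poor"), False),
    (("short", "good", "poor", "low", "good"), True),
]


def matches(h, x):
    """h matches x if every constraint is '?' or equals x's value (None matches nothing)."""
    return all(c == "?" or c == v for c, v in zip(h, x))


def more_general_or_equal(h1, h2):
    """True if h1 matches every instance that h2 matches."""
    if None in h2:  # h2 matches no instance at all
        return True
    return all(a == "?" or a == b for a, b in zip(h1, h2))


def min_generalization(s, x):
    """Least generalization of s that matches the positive example x."""
    return tuple(v if c is None else (c if c == v else "?") for c, v in zip(s, x))


def min_specializations(g, x):
    """Replace one '?' in g by a value other than x's, so that x is excluded."""
    out = []
    for i, c in enumerate(g):
        if c == "?":
            out += [g[:i] + (v,) + g[i + 1:] for v in DOMAINS[i] if v != x[i]]
    return out


def candidate_elimination(examples):
    n = len(DOMAINS)
    G = {("?",) * n}    # Step 1: most general boundary
    S = {(None,) * n}   # most specific boundary
    for x, positive in examples:  # Step 2
        if positive:
            G = {g for g in G if matches(g, x)}
            S = {min_generalization(s, x) for s in S}
            S = {s for s in S if any(more_general_or_equal(g, s) for g in G)}
        else:
            S = {s for s in S if not matches(s, x)}
            candidates = set()
            for g in G:
                if not matches(g, x):
                    candidates.add(g)  # g already rejects x
                else:
                    candidates.update(h for h in min_specializations(g, x)
                                      if any(more_general_or_equal(h, s) for s in S))
            # keep only the maximally general members
            G = {g for g in candidates
                 if not any(h != g and more_general_or_equal(h, g) for h in candidates)}
    return S, G


S, G = candidate_elimination(EXAMPLES)
print("S =", S)
print("G =", G)
```

On these five examples the boundaries converge to S = {`<?,good,?,?,good>`} and G = {`<?,?,?,?,good>`}, the same two hypotheses that a brute-force search of this hypothesis space finds.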

Source: http://www.eng.auburn.edu/~sealscd/chapter18.doc
